While trying to install the Stanford Alpaca system on my M1 Mac Studio, which I dubbed homeGPT, I discovered that some of the required packages only support CUDA … in other words, I need an NVIDIA graphics card.

One of my research computers from school is a Windows 11 system with a nice NVIDIA graphics card, the GeForce GT 1030. In this post, I’m blogging the process of getting Python, LLaMA, and Alpaca set up on that system. I’m dubbing it “officeGPT” since it’s one of my Samford research computers.

Python and PyTorch

I haven’t done any Python development on Windows before, so this post documents the process of getting PyTorch installed and configured on Windows 11.

Step 1: Get Python onto the system. I opted for the all-in-one Miniconda installer for Windows:

https://repo.anaconda.com/miniconda/Miniconda3-latest-Windows-x86_64.exe

Step 2: Install the PyTorch packages (the CUDA 11.8 build, from PyTorch’s cu118 wheel index):

pip3 install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu118
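To double-check which build pip actually installed, a small sketch like this reports the PyTorch version, the CUDA version the wheel was built against, and whether a GPU is visible. The helper name is my own, and it assumes it runs inside the same Miniconda environment that pip3 installed into:

```python
# Hypothetical helper: summarize the installed PyTorch build.
def describe_torch_build():
    """Return a small dict describing the installed PyTorch build."""
    try:
        import torch
    except ImportError:
        return {"installed": False}
    return {
        "installed": True,
        "torch_version": torch.__version__,  # CUDA wheels typically carry a suffix like "+cu118"
        "cuda_build": torch.version.cuda,  # None for CPU-only builds
        "cuda_available": torch.cuda.is_available(),
    }


print(describe_torch_build())
```

If "cuda_build" comes back as None, pip most likely grabbed a CPU-only wheel rather than the cu118 one.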

Step 3: Hello, CUDA

import torch

print(torch.zeros(5).to("cuda"))

Running the program above produced the following:

tensor([0., 0., 0., 0., 0.], device='cuda:0')

Success! It appears PyTorch was installed with CUDA support enabled correctly.
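Besides confirming that CUDA works, it’s worth checking how much dedicated memory the card has, since large models need a lot of it. A minimal sketch (the helper name is my own) using PyTorch’s device properties:

```python
# Hypothetical helper: report the first GPU's name and total memory.
# Returns None when PyTorch is missing or no CUDA device is visible.
def gpu_memory_report(device_index=0):
    try:
        import torch
    except ImportError:
        return None
    if not torch.cuda.is_available():
        return None
    props = torch.cuda.get_device_properties(device_index)
    # total_memory is in bytes; convert to GiB (2**30 bytes)
    return props.name, props.total_memory / 2**30


report = gpu_memory_report()
if report is None:
    print("No CUDA device visible to PyTorch")
else:
    name, gib = report
    print(f"{name}: {gib:.2f} GiB total")
```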

To LLaMA or not to LLaMA, that is the question

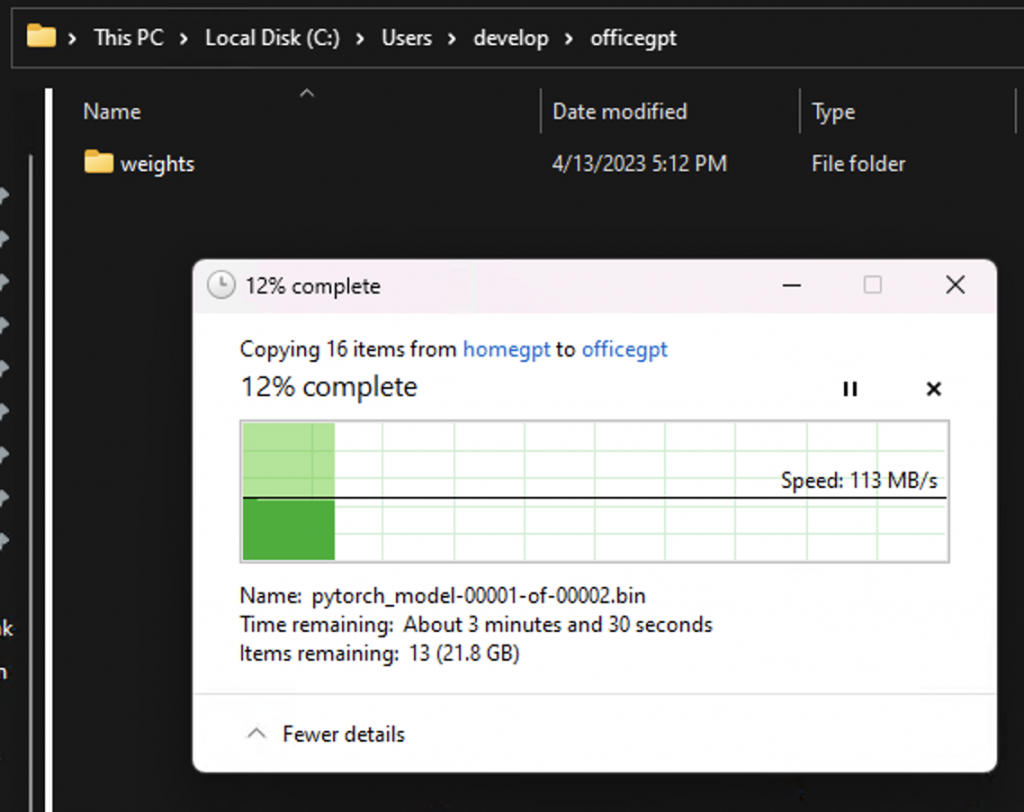

Technically, I don’t need the LLaMA repo with its example scripts if I’m going to be using Alpaca; I only need the pre-trained LLaMA 7B model. That model has to be converted to the Hugging Face Transformers format, which I’ve already done on my Mac. So, theoretically, I can skip the LLaMA setup and just copy the converted model over to the Windows system.
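Before relying on the copied model, it’s worth confirming the copy is complete. Here is a minimal sketch with hypothetical helper names. It assumes the converted directory holds a config.json, a SentencePiece tokenizer.model, and PyTorch (.bin) or safetensors weight files; the exact file list depends on the transformers version used for the conversion:

```python
import shutil
from pathlib import Path

# Assumed layout of a converted LLaMA model directory (may vary by version).
REQUIRED_FILES = ("config.json", "tokenizer.model")
WEIGHT_PATTERNS = ("pytorch_model*.bin", "*.safetensors")


def missing_model_files(model_dir):
    """List the expected files that are absent from model_dir."""
    d = Path(model_dir)
    missing = [name for name in REQUIRED_FILES if not (d / name).is_file()]
    if not any(any(d.glob(pattern)) for pattern in WEIGHT_PATTERNS):
        missing.append("weights (pytorch_model*.bin or *.safetensors)")
    return missing


def copy_converted_model(src, dst):
    """Copy a converted model directory, refusing to copy an incomplete one."""
    problems = missing_model_files(src)
    if problems:
        raise FileNotFoundError(f"{src} is missing: {', '.join(problems)}")
    shutil.copytree(src, dst, dirs_exist_ok=True)  # Python 3.8+
    return missing_model_files(dst)  # should be [] after a good copy
```

In practice, copying over a USB drive or network share and then running missing_model_files on the Windows side is enough to catch a truncated transfer.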

Alpaca

https://github.com/tatsu-lab/stanford_alpaca

Problem: no git command-line client installed.

Solution: install Git for Windows (git-scm), then clone the repo and install its requirements:

pip install -r requirements.txt

Then install Hugging Face Transformers from source:

git clone https://github.com/huggingface/transformers

cd transformers

pip install -e .
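A quick sanity check, assuming the point of the source install is to pick up the LLaMA model classes. The helper name is hypothetical; it only checks that the class names can be imported, not that a model loads:

```python
# Hypothetical check: does the installed transformers package expose the
# LLaMA classes? An ImportError means transformers is missing from this
# environment or the installed version predates LLaMA support.
def has_llama_classes():
    try:
        from transformers import LlamaForCausalLM, LlamaTokenizer  # noqa: F401
    except ImportError:
        return False
    return True


print("LLaMA classes available:", has_llama_classes())
```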

OK, whew, lots happening … I still got an NCCL error. PyTorch doesn’t support the NCCL backend on Windows, but thankfully things work with the Gloo backend. I had to hack into hf/transformers/training_args.py and force the backend to gloo.
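Rather than hard-coding gloo, a script could pick the backend from what the installed PyTorch build supports. This is a minimal sketch with hypothetical helper names; the port number is an arbitrary assumption. Recent Transformers releases also accept a ddp_backend training argument (e.g. --ddp_backend gloo), which may make editing training_args.py unnecessary, though I haven’t checked which version introduced it:

```python
import os


def choose_ddp_backend():
    """Return "nccl" when this PyTorch build supports it, else "gloo"."""
    try:
        import torch.distributed as dist
    except ImportError:
        return "gloo"
    # is_nccl_available() is only defined when torch.distributed is available,
    # so check is_available() first; Windows builds report no NCCL.
    if dist.is_available() and dist.is_nccl_available():
        return "nccl"
    return "gloo"


def init_single_process_group():
    """Start a one-process group on localhost (rank 0 of world size 1)."""
    import torch.distributed as dist

    os.environ.setdefault("MASTER_ADDR", "127.0.0.1")
    os.environ.setdefault("MASTER_PORT", "29500")  # assumed free port
    dist.init_process_group(backend=choose_ddp_backend(), rank=0, world_size=1)
```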

A few more fixes (mainly to the command-line arguments) and I got it to run and load the LLaMA model … but then a new error:

torch.cuda.OutOfMemoryError: CUDA out of memory. Tried to allocate 774.00 MiB (GPU 0; 2.00 GiB total capacity; 1.51 GiB already allocated; 0 bytes free; 1.52 GiB reserved in total by PyTorch) If reserved memory is >> allocated memory try setting max_split_size_mb to avoid fragmen
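Some back-of-the-envelope arithmetic shows why a 2 GiB card can’t cope. This minimal sketch counts only the memory for the weights, assuming a nominal 7 billion parameters (LLaMA 7B actually has about 6.7 billion) and ignoring activations, gradients, and optimizer state, which fine-tuning adds on top:

```python
# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1, "int4": 0.5}


def weights_gib(n_params, dtype):
    """Memory for the model weights alone, in GiB (2**30 bytes)."""
    return n_params * BYTES_PER_PARAM[dtype] / 2**30


for dtype in BYTES_PER_PARAM:
    print(f"LLaMA 7B weights in {dtype}: {weights_gib(7e9, dtype):5.1f} GiB")
```

Half precision alone needs roughly 13 GiB, and even at 4 bits per weight the model would not fit in 2 GiB.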

This was not “fixable”; another dead end. The error means you need far more dedicated GPU memory than this card has (I saw someone post a minimum of 12 GB, whereas mine has only 2 GB of dedicated GPU memory). I’m going to try “cpu” only (no CUDA), since I can always leave it running for a week or two!
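In your own scripts, CPU fallback is easiest when the device is chosen once instead of hard-coding "cuda" as in the hello-CUDA test. A minimal sketch with hypothetical helper names; note that it can’t help when a dependency calls CUDA directly:

```python
# Hypothetical helpers: choose a device once, then move tensors there
# instead of hard-coding "cuda".
def pick_device():
    try:
        import torch
    except ImportError:
        return "cpu"
    return "cuda" if torch.cuda.is_available() else "cpu"


def zeros_on(device, n=5):
    import torch

    return torch.zeros(n).to(device)
```

Calling zeros_on(pick_device()) returns a CUDA tensor on the Windows box and a CPU tensor on a machine without a usable NVIDIA GPU.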

Shoot, running on the CPU didn’t work either: some parts of the Python-based implementation still depend on CUDA, even with the “no_cuda” flag set to true. Another flag may need to be set, or some of the code or required packages could perhaps be hacked, but I’m going to consider it a dead end, especially since…

Alternative – alpaca.cpp runs on M1/M2

It runs on my Mac Studio when compiled from source: https://github.com/antimatter15/alpaca.cpp

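The project also provides the “alpaca-fied” fine-tuned LLaMA 7B model, quantized to 4 bits per weight: it still has about 7 billion parameters, but each is stored in only 4 bits, so the file is around 4 GB.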
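Quantizing to 4 bits is how a 7-billion-parameter model shrinks to a few gigabytes: each weight is stored as a small signed integer, plus one shared scale per block of weights. Here is a minimal sketch of the idea, assuming a simplified symmetric scheme. This is not alpaca.cpp’s actual file format:

```python
# Simplified, hypothetical 4-bit block quantization (not the real on-disk
# format): each block shares one float scale, and every weight becomes a
# signed 4-bit integer in [-8, 7].
def quantize_block(values):
    amax = max(abs(v) for v in values)
    scale = amax / 7 if amax > 0 else 1.0
    q = [max(-8, min(7, round(v / scale))) for v in values]
    return scale, q


def dequantize_block(scale, q):
    return [scale * x for x in q]


def quantized_size_gb(n_params, bits_per_weight):
    """Size of the packed weights alone, in GB (1e9 bytes), ignoring scales."""
    return n_params * bits_per_weight / 8 / 1e9


block = [i / 10 - 1.5 for i in range(32)]
scale, q = quantize_block(block)
restored = dequantize_block(scale, q)
print("max error:", max(abs(a - b) for a, b in zip(block, restored)))
print("7B weights at 4 bits:", quantized_size_gb(7e9, 4), "GB before scale overhead")
```

The per-block scales add some overhead on top of the 3.5 GB of packed weights, which is consistent with a file of around 4 GB.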