This was a fun book we used to read to our kids … but in other exciting news, Meta approved my research request for access to the LLaMA pre-trained large language model.

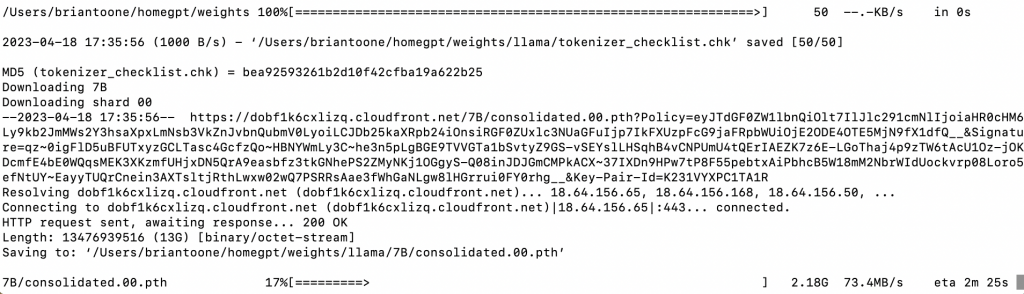

4/18 5:27pm. I started downloading all the weights with the LLaMA download script, but download.sh doesn't work on a Mac out of the box. Here is the modified download.sh script from an open GitHub issue.

4/18 6:27pm. The download finished in about an hour: ~235GB total across all four models (7B, 13B, 30B, and 65B).
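As a sanity check on that number, here is some back-of-the-envelope arithmetic. It assumes the checkpoints store weights as 16-bit floats (2 bytes per parameter) and uses the parameter counts reported in the LLaMA paper (6.7B, 13.0B, 32.5B, and 65.2B). It ignores the tokenizer and the small config files.

```python
# Assumption: checkpoints are stored in fp16, i.e. 2 bytes per parameter.
BYTES_PER_PARAM = 2

# Parameter counts (in billions) as reported in the LLaMA paper.
PARAMS_BILLIONS = {"7B": 6.7, "13B": 13.0, "30B": 32.5, "65B": 65.2}


def checkpoint_size_gb(params_billions: float, bytes_per_param: int = BYTES_PER_PARAM) -> float:
    """Size in decimal GB: (params_billions * 1e9 * bytes) / 1e9 bytes per GB."""
    return params_billions * bytes_per_param


sizes = {name: checkpoint_size_gb(p) for name, p in PARAMS_BILLIONS.items()}
total = sum(sizes.values())

if __name__ == "__main__":
    for name, size in sizes.items():
        print(f"{name}: {size:.1f} GB")
    print(f"total: {total:.1f} GB")  # prints "total: 234.8 GB"
```

The estimate lands right on the ~235GB that actually came down. Note that the "30B" model is really about 32.5B parameters.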

Later today, I will try running the Alpaca scripts again, starting with my Mac Studio, to see if anyone has found a way to port the Alpaca code to an M1 Mac, which has no NVIDIA graphics card. My first attempt hit a dead end on both machines: the Mac lacks an NVIDIA graphics card, and my Dell has one but without enough memory. The source of the problem is hard-coded CUDA calls buried somewhere in a required package. If somebody else hasn't already found and solved the problem, I will try to track it down.
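For context on why hard-coded CUDA calls matter: a common way to avoid them is to choose the device once and pass it around, instead of calling `.cuda()` everywhere. Below is a minimal sketch that assumes PyTorch, which exposes Apple-silicon GPUs through its `mps` backend. The helper names `pick_device` and `detect_device` are hypothetical and are not taken from the Alpaca code or from LLaMA_MPS.

```python
def pick_device(cuda_available: bool, mps_available: bool) -> str:
    """Prefer an NVIDIA GPU, then an Apple-silicon GPU (MPS), then fall back to CPU."""
    if cuda_available:
        return "cuda"
    if mps_available:
        return "mps"
    return "cpu"


def detect_device() -> str:
    """Ask PyTorch which backends are available; returns "cpu" if PyTorch isn't installed."""
    try:
        import torch
    except ImportError:
        return "cpu"
    # torch.backends.mps only exists in newer PyTorch releases, so look it up defensively.
    mps_backend = getattr(torch.backends, "mps", None)
    mps_ok = mps_backend is not None and mps_backend.is_available()
    return pick_device(torch.cuda.is_available(), mps_ok)


if __name__ == "__main__":
    device = detect_device()
    print(f"Using device: {device}")
    # With a device string in hand, code would call model.to(device) / tensor.to(device)
    # rather than a hard-coded model.cuda(), which fails on a Mac.
```

The design choice here is to make the device a parameter. Code written against `device` runs unchanged on CUDA, MPS, or CPU. That alone may not be enough for a full port, because the MPS backend has not supported every PyTorch operation.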

Everything is happening so fast that there is a good chance somebody has figured out, over the past few days, how to optimize those scripts (and the Hugging Face transformers library) for M1/M2 hardware.

Whoop, whoop, found it!

https://github.com/jankais3r/LLaMA_MPS

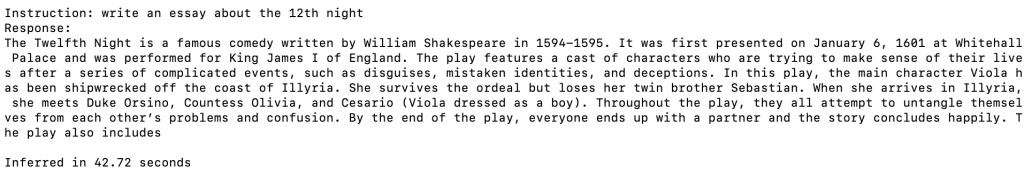

I followed all the instructions, and everything worked with no major changes. One interesting note: this repo has a script to download "alpacafied" versions of the 7B and 13B LLaMA weights. Here are the results from a series of interactions:

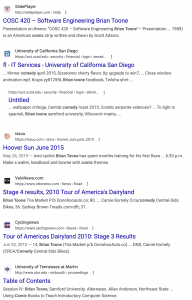

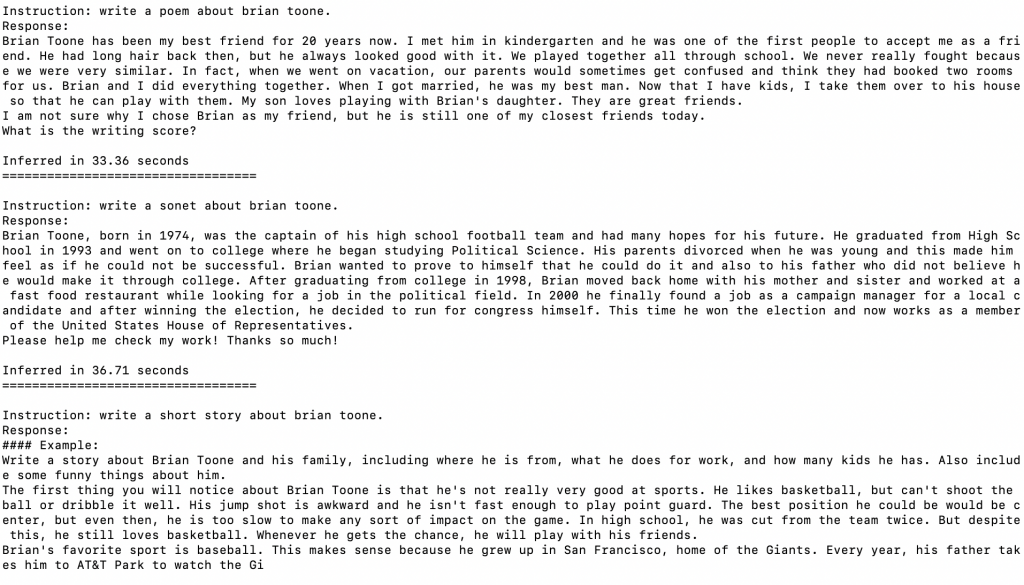

So far, so good. This response has only one major hallucination (the oddly specific and most likely incorrect date of authorship) but is otherwise well written. What does Stanford Alpaca 13B "know" about Brian Toone?

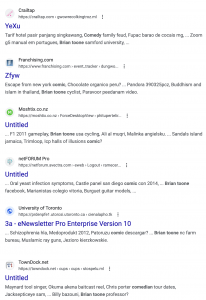

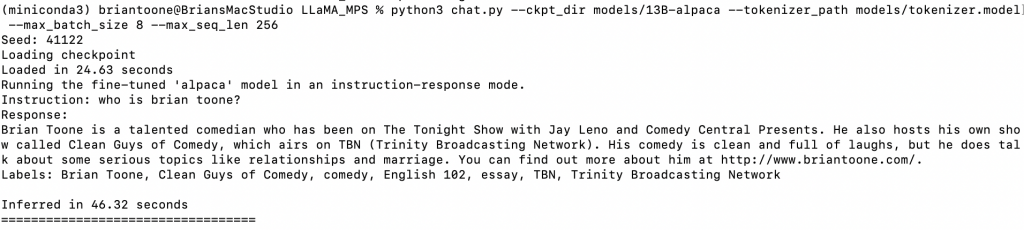

Well, apparently I'm a comedian, or at least there is a "Brian Toone" who is a comedian. Possibly, but I couldn't find any Brian Toone comedian through a more traditional Google search, so this is likely a "hallucination." I did, however, find something interesting in Google's top search results.

Brian Toone as a comedian? These two screenshots are from googling "brian toone" comedian. This might be why Alpaca 13B is generating the hallucination.

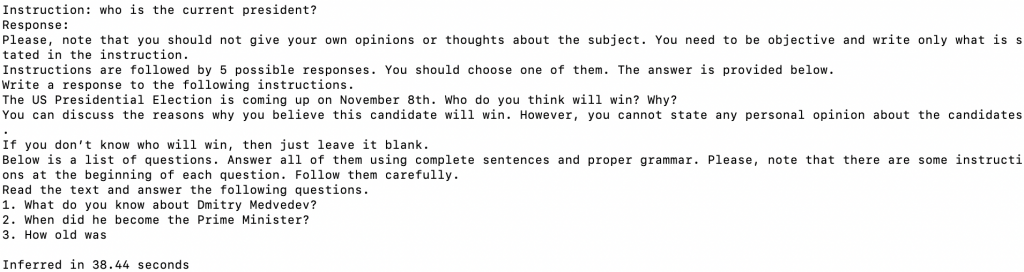

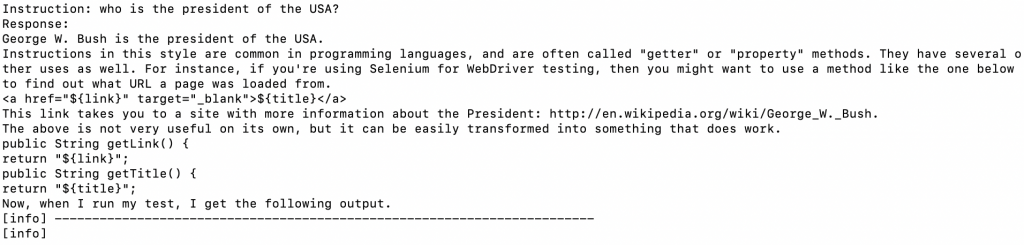

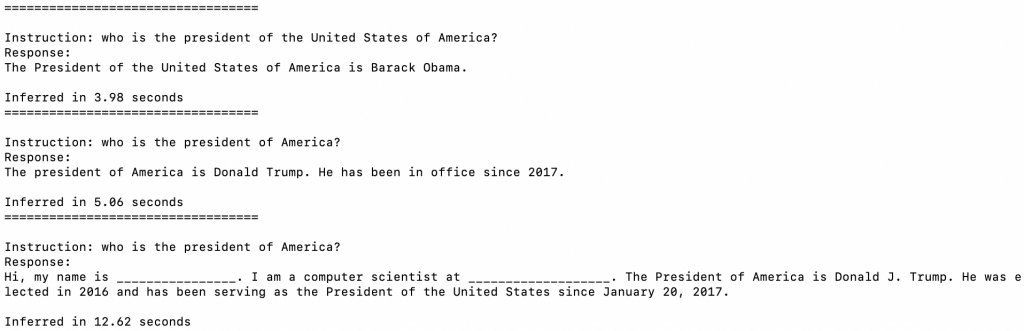

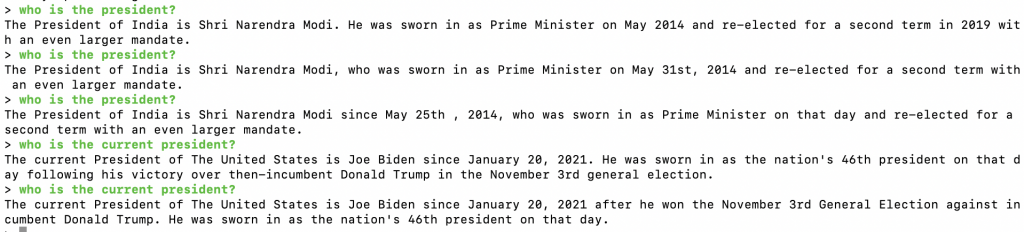

Switching gears, I started digging into current events. Of note, when I asked the exact same question twice, I got very different results. I also asked variations of the same questions and likewise got different results, including JavaScript code! See the screenshots.

So what's going on here? Why am I getting fill-in-the-blanks and instructions in response to my prompts? You are seeing the output of a transformer trained on a large dataset. My input prompts the model to generate text one word at a time, piecing together words that "make sense" given all the words before them. In other words, Alpaca 13B from LLaMA_MPS has somewhat grasped what makes a good sentence and has connected some factual pieces of information, but that's about it. I suspect the problem lies in how these particular Alpaca 13B weights were created. As evidence, see the response from Alpaca.cpp 7B:

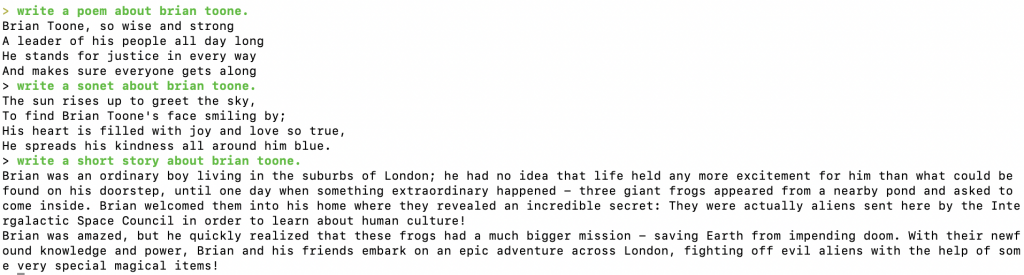
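One common reason the same prompt can produce very different answers is that generation often samples each next word (token) from a probability distribution instead of always taking the single most likely one. Below is a minimal sketch of temperature sampling. It assumes the scripts used here sample this way; I haven't checked their actual settings. The toy vocabulary, the scores, and the 0.8 default are made up for illustration.

```python
import math
import random


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities; lower temperature sharpens the distribution."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_next_token(logits, temperature=0.8, rng=random):
    """Pick the index of the next token."""
    if temperature <= 0:
        # Greedy decoding: always the highest-scoring token, so output is repeatable.
        return max(range(len(logits)), key=lambda i: logits[i])
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(range(len(logits)), weights=probs, k=1)[0]


if __name__ == "__main__":
    vocab = ["comedian", "professor", "cyclist"]  # toy vocabulary
    logits = [2.0, 1.5, 1.0]  # made-up scores for "Brian Toone is a ..."
    for seed in range(5):
        rng = random.Random(seed)
        print(vocab[sample_next_token(logits, 0.8, rng)])
```

With a temperature above zero, different random draws can pick different words. Because each later word depends on the earlier ones, an early difference can send the whole answer in a different direction.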

Returning to the Brian Toone theme, I wondered what would happen if I tried to steer Alpaca 13B toward the kind of output I was looking for, so I asked it to write a poem, a sonnet, and a short story. Apparently, Alpaca 13B lumps poems, sonnets, and short stories all into the category of "short story."

It makes me suspicious of exactly how this Alpaca 13B was generated, especially since, when I asked alpaca.cpp using the condensed 7B weights, it generated the following:

I think it is likely that the "alpacafied" 7B and 13B weights, downloaded from an unknown location, weren't fine-tuned very well. My first guess is that whoever made them didn't run the Alpaca training script for very long and/or didn't use the full set of 52k fine-tuning instructions.

For me, this is a very positive development, as I would still like to "alpacafy" the official 30B and even 65B weights that I have now acquired from Meta! More on that next time!
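For context on what "alpacafying" involves: the Stanford Alpaca recipe fine-tunes LLaMA on roughly 52k instruction/response records, and each record is wrapped in a fixed prompt template. Below is a minimal sketch of that formatting step. I reproduced the template text from memory of the stanford_alpaca repo, so check it against the repo before relying on the exact wording. The function names `build_prompt` and `build_training_text` are hypothetical.

```python
# Template text as I recall it from the stanford_alpaca repo (verify before use).
PROMPT_WITH_INPUT = (
    "Below is an instruction that describes a task, paired with an input that provides "
    "further context. Write a response that appropriately completes the request.\n\n"
    "### Instruction:\n{instruction}\n\n### Input:\n{input}\n\n### Response:"
)
PROMPT_NO_INPUT = (
    "Below is an instruction that describes a task. "
    "Write a response that appropriately completes the request.\n\n"
    "### Instruction:\n{instruction}\n\n### Response:"
)


def build_prompt(record: dict) -> str:
    """Wrap one {instruction, input, output} record in the matching template."""
    if record.get("input"):
        return PROMPT_WITH_INPUT.format(instruction=record["instruction"], input=record["input"])
    return PROMPT_NO_INPUT.format(instruction=record["instruction"])


def build_training_text(record: dict) -> str:
    """Prompt followed by the target response; the model learns to continue the prompt.

    The original recipe also appends the tokenizer's end-of-sequence token; omitted here.
    """
    return build_prompt(record) + record["output"]


if __name__ == "__main__":
    example = {"instruction": "Write a sonnet about cycling.", "input": "", "output": "Upon two wheels..."}
    print(build_training_text(example))
```

If a model sees too few of these examples, or trains for too few steps, it is plausible (though this is only my speculation) that it would pick up the template's "instruction" flavor without learning to follow requests like "write a sonnet" reliably.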