The computer science department is launching a brand new concentration in computer engineering. You can now get a computer science degree with your transcript marked as having a concentration in computer engineering. This is a great way to get your “foot in the door” at a hardware company or any position requiring expertise in how… Continue reading Computer Engineering at Samford University

Category: Research

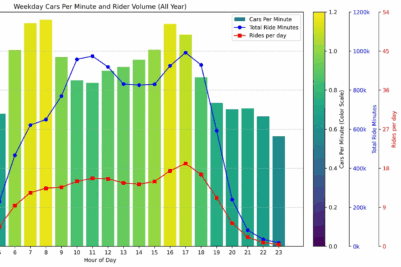

mybiketraffic speed analysis

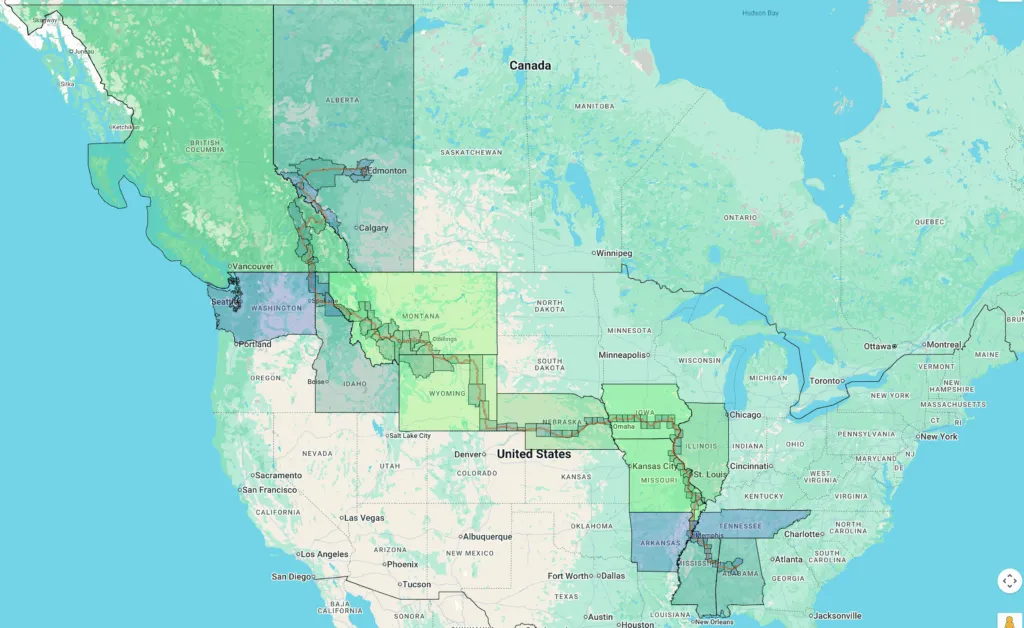

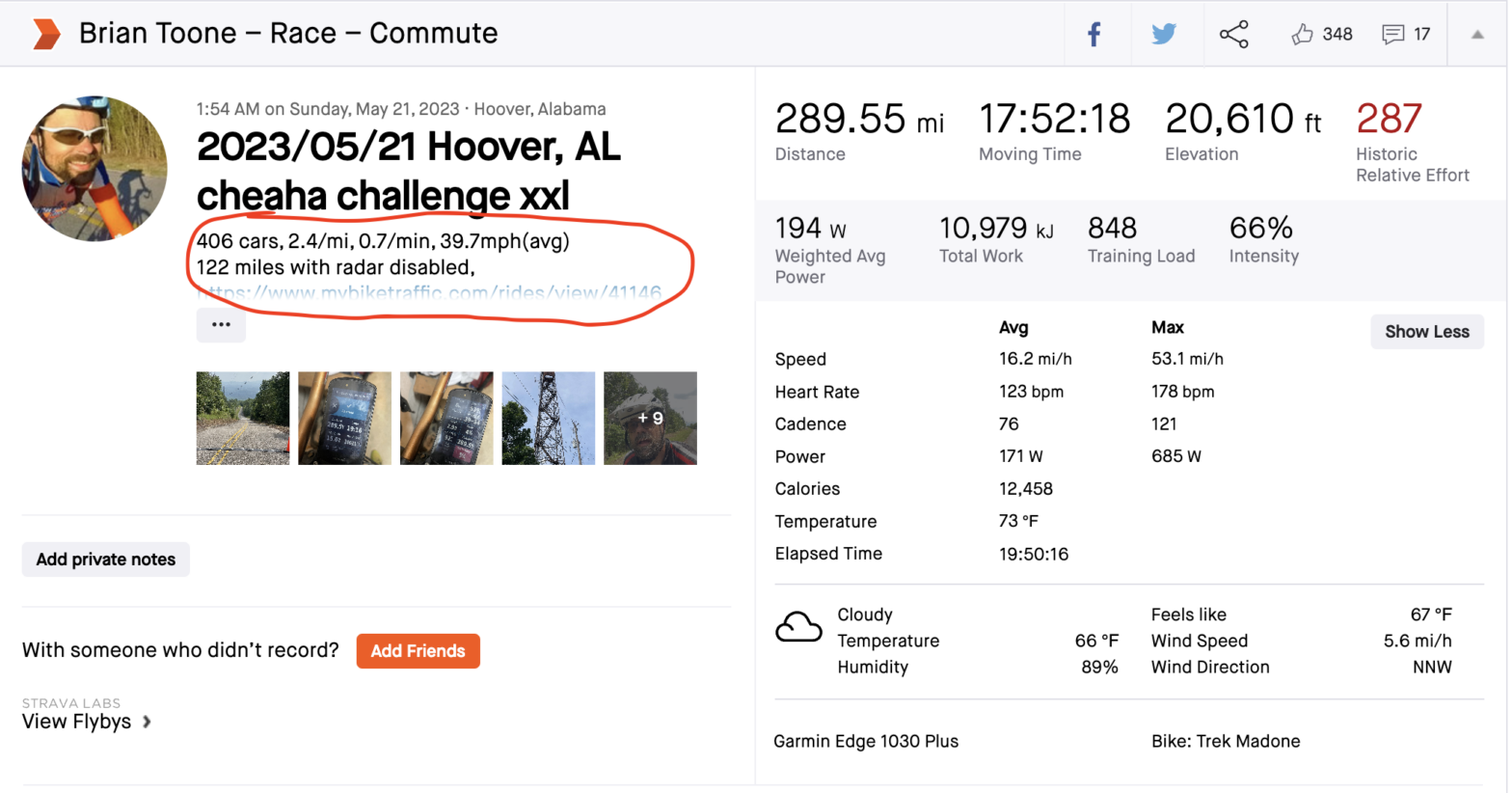

The map above shows my 3,757-mile route home from Canada, highlighting states and counties. Check out my most recent Substack post (https://mybiketraffic.substack.com), where I analyze the traffic data I collected on my 3,757-mile bike ride home to Alabama from Edmonton, Canada.

mybiketraffic time of day analysis

I’ve started a research newsletter on Substack, separate from my work blog, to keep my users updated on the results of this project. Check out my latest post by clicking the image or link below!

I’m thinking…

I’ve been experimenting with Google Gemini code generation. As with ChatGPT, one of the new features is the ability to see a dump of the “thinking” process. Presumably each character output in the “thinking” process is appended to the original prompt and re-run through the transformer? I’m going to research this more, as my speciality is neural… Continue reading I’m thinking…

Samford Family Academy Online Workshop

Had a great time presenting my overview of AI technology and its impacts. I got a bit too carried away with the technical details, so I didn’t quite make it to all the impacts AI is having on society. See the later slides in the PowerPoint below. Many thanks to all who joined in… Continue reading Samford Family Academy Online Workshop

Samford Homecoming Lecture: What in the world is happening in AI?

I gave a lecture today as part of the homecoming activities on the current impact of AI in both our world and in education. I’m going to write up more about it later, but it went well! Here are the PowerPoint slides: And here is a link to the Facebook Live video: https://www.facebook.com/brian.toone/videos/1534532750722315 If the Facebook… Continue reading Samford Homecoming Lecture: What in the world is happening in AI?

a tale of complexity

Let me tell you a tale – a tale of magic and power. OK, maybe there isn’t any real magic to this other than the magic described by Arthur C. Clarke in this quote: “Any sufficiently advanced technology is indistinguishable from magic.” My reference to power is in the sense of what you as a third… Continue reading a tale of complexity

chatGPT code hallucinations

By this point, many people understand the concept of an AI “hallucination”. This is the term that has come to describe incorrect information stated as fact in the output of a ChatGPT prompt. For example, if you ask ChatGPT “Who is Brian Toone?”, you get the response below, which has some correct information, but… Continue reading chatGPT code hallucinations

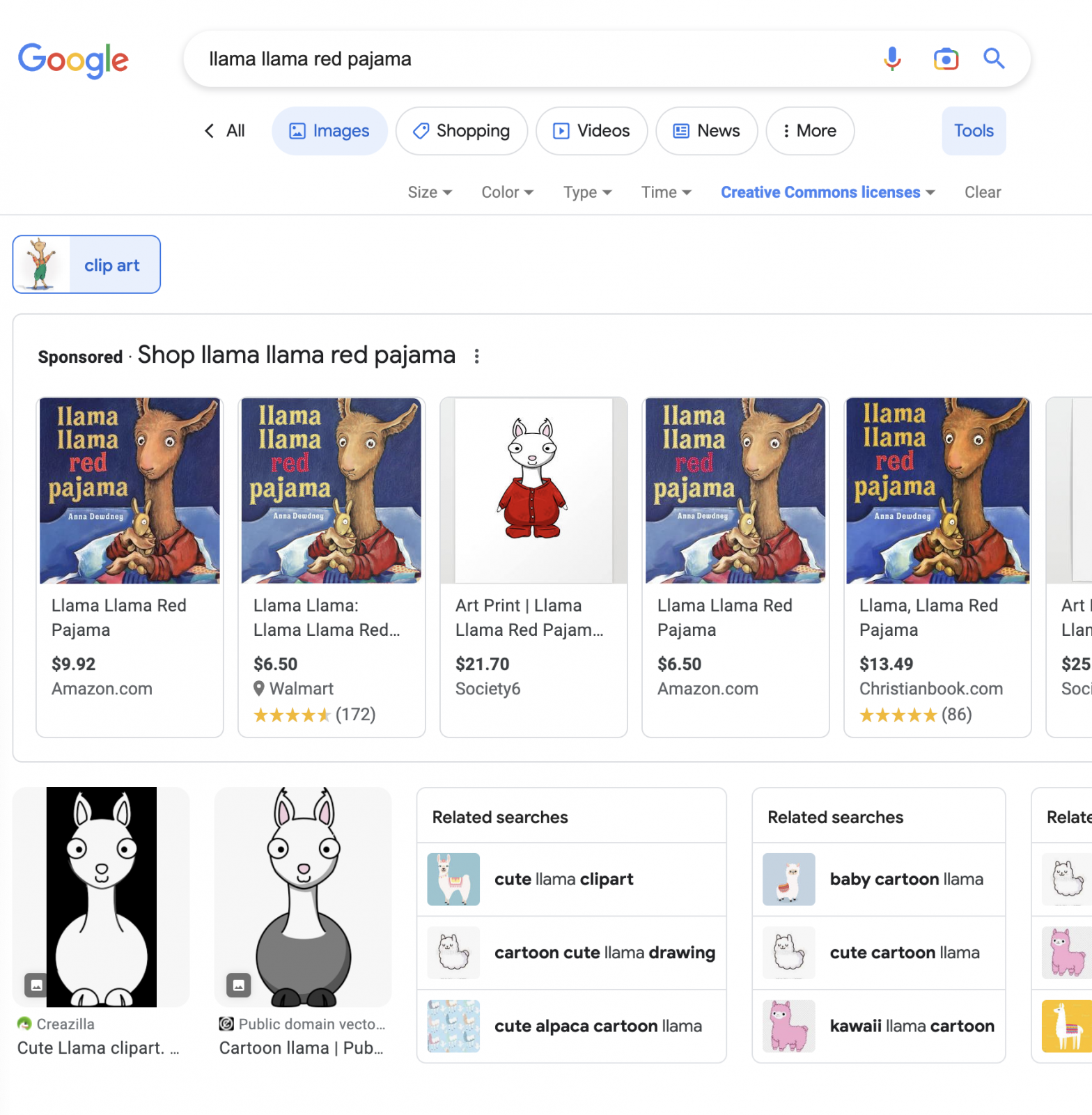

Llama, Llama! (Red Pajama)

This was a fun book we used to read to our kids … but in other exciting news, Meta approved my research request for access to the LLaMA pre-trained large language model. 4/18 5:27pm. I started using the llama download script to download all the data, but the download.sh script doesn’t work by default on… Continue reading Llama, Llama! (Red Pajama)

Win11 officeGPT

Through my efforts to install the Stanford Alpaca system on my M1 Mac Studio, which I dubbed homeGPT, I discovered that some of the required packages are configured to only use CUDA … in other words, I need an NVIDIA graphics card. One of my research computers from school is a Windows 11 system with… Continue reading Win11 officeGPT