I’m still waiting to hear back from Meta (Facebook) about my application for the llama dataset. I can install all the tools, but the pre-trained model which took months to train is behind an application form. It was leaked online through 4chan with someone then submitting a GitHub pull request to update the AI research page to include the torrent link for downloading the model replacing the application form.

By the time I found it, the torrent link was dead. I’d rather obtain official approval and the model directly from Meta in any case, but unfortunately, I still haven’t heard back from my application form. Even though Meta issued a follow-up press release after the leak indicating they were still going to continue granting access to researchers … Well, I’m waiting!

I wonder if this open letter sent out yesterday will slow things down:

Related: the “dangerous” years of driving immediately after cars started to become widely available – https://www.detroitnews.com/story/news/local/michigan-history/2015/04/26/auto-traffic-history-detroit/26312107/

In the meantime, I’ve been experimenting with ChatGPT as a programming tool. Specifically, to save time to generate GANs in my AI class. Here’s how that has gone…

- “can you create a gan using keras”

- “your answer wasn’t finished … can you continue your answer?”

- “can you add code to display the generated images every 10 epochs side-by-side with a random real image?”

- “your answer didn’t finish. can you just start with the for loop?”

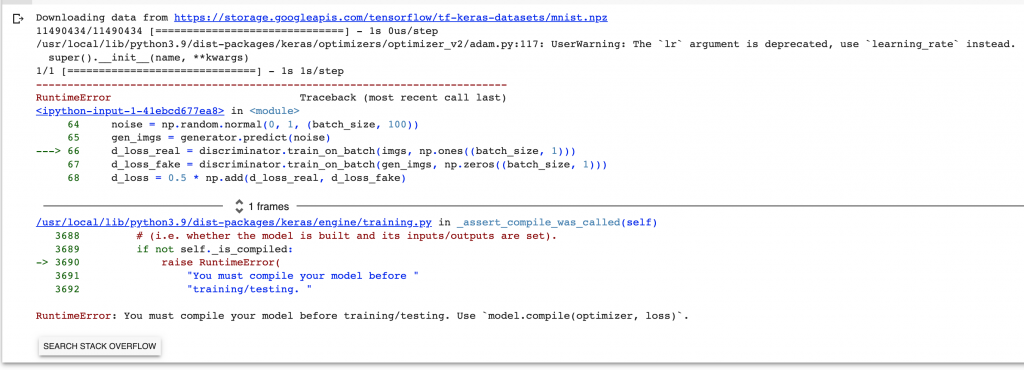

After ALL of that, I had the complete code, but it still had a couple errors:

- It forgot to compile the discriminator it had created.

- It had a “typo” (if you can call it that) on a print statement … see screenshots of the error/fixes below.

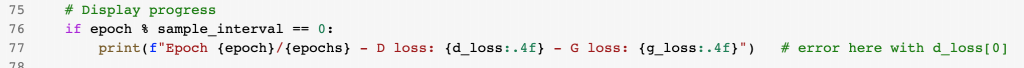

After adding the compile line and fixing the typo, it ran and showed me the losses. But I wanted to see the code that was being generated. So…

- “can you add code to display the generated images every 10 epochs side-by-side with a random real image?”

- “your answer didn’t finish. can you just start with the for loop?”

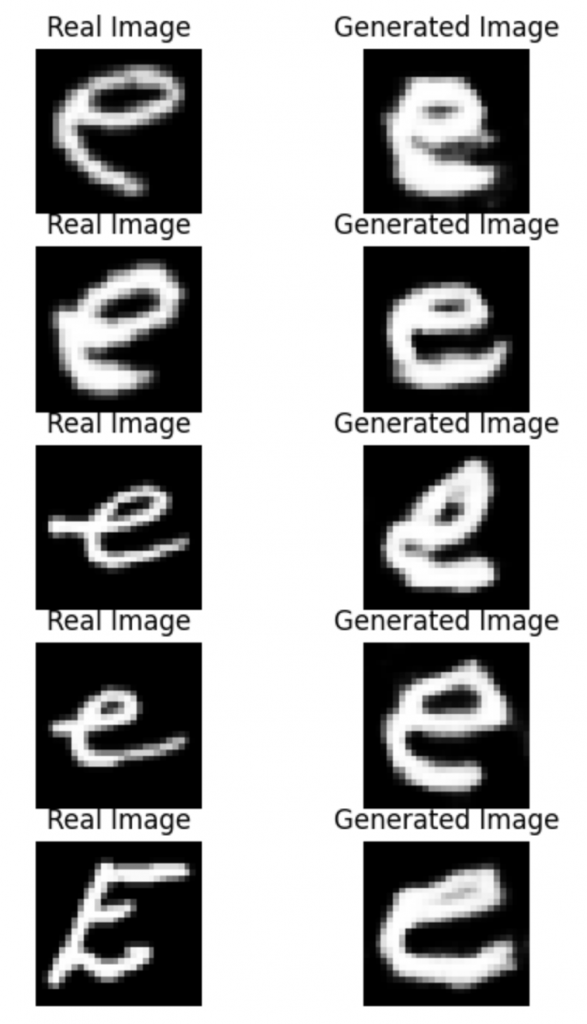

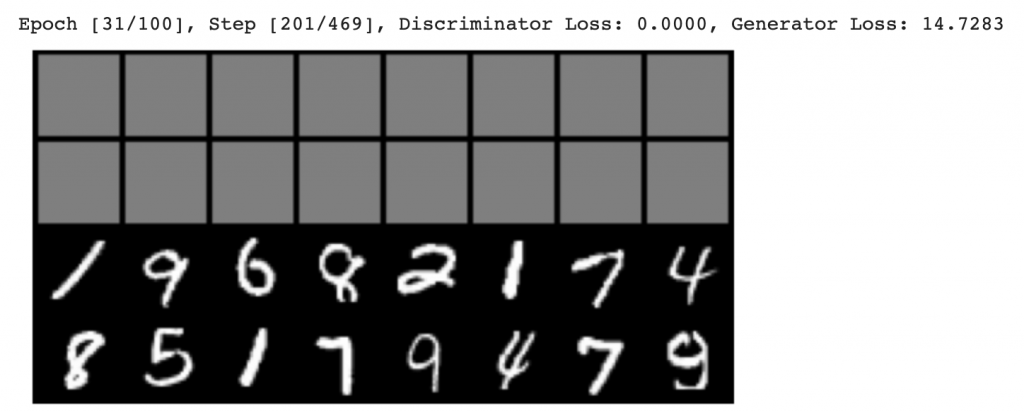

I updated the code and ran it again. Some decent results as shown above. I realized maybe it would be better to have a few sample generated images…

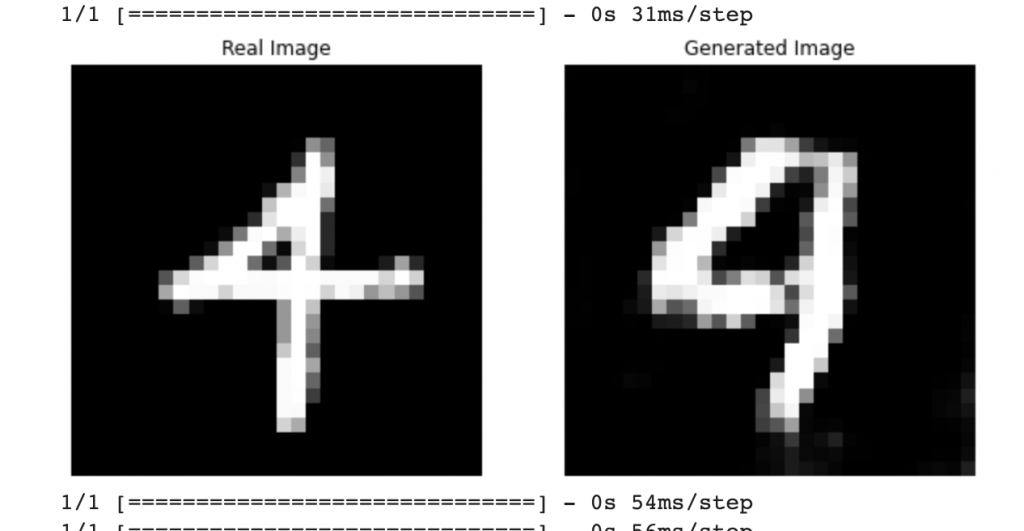

- “can you update the code to display five sample generated images instead of just one?”

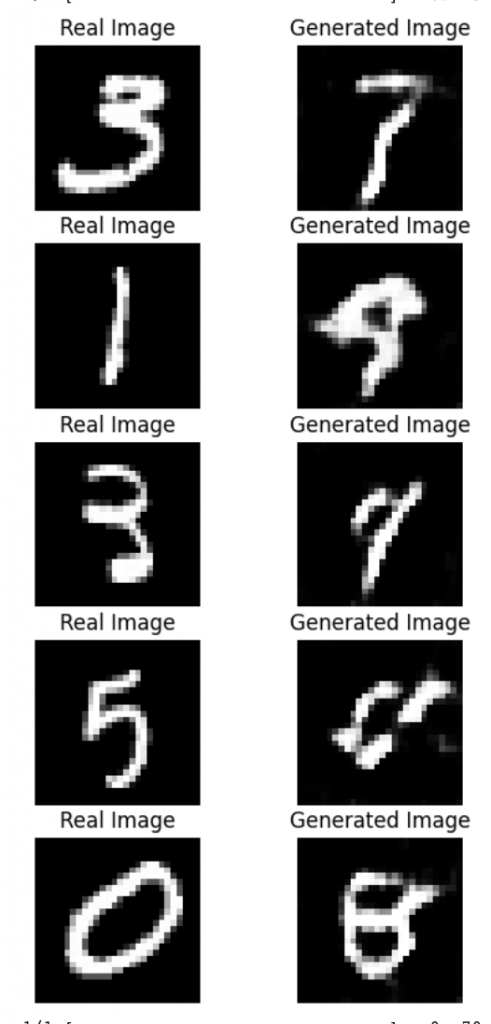

It had the same d_loss[0] error as before, but otherwise chatGPT output good pyplot code – although a little differently than I would have done it with five rows and two columns instead of one row and six columns. This allowed it to also sample five different real images, too, which is not what I was imagining. Here is the result:

OK, but not great. I wondered about the “compile” line that I added since you have to specify the learning rate, which is an important hyper-parameter for a neural network. I had used the same learning rate as the discriminator. So…

- “should the generator have the same learning rate as the discriminator?”

It’s not necessary for the generator to have the same learning rate as the discriminator. In fact, it’s common to use a lower learning rate for the generator than for the discriminator. This is because the generator needs to be more careful in updating its parameters, as small changes to the generator can have a big impact on the quality of the generated images. On the other hand, the discriminator needs to be updated more aggressively to keep up with the improving generator.

In practice, you may need to experiment with different learning rates for the generator and discriminator to find the best balance between stability and speed of convergence.

chatGPT response to my question

I have omitted all the code because I just copy/pasted the responses into a notebook here: gan-digits.ipynb

Next, the real experiment we had talked about in class was working with letters instead of digits. I randomly picked the letter “e” and said wouldn’t it be cool if we could train a GAN to generate some good “e’s”. So…

- “can you modify the code to use the emnist letters dataset? furthermore, can you change it to only train on the letter e?”

- “it still didn’t finish, can you start from the for loop?”

And within a fraction of a second, it started generating code … although the code had many more errors than before. I’m thinking the “emnist” prompt threw off the generation process.

Here’s the list of errors:

- It tried to import a dataset that didn’t exist without first installing an extra package. And even then, it was the wrong code.

- It omitted importing some modules and tried to import others from the wrong packages.

- It mistakenly thought “e” would be represented by “4”, but it’s represented by the label “5” in the emnist dataset.

- It badly butchered the code to display real and generated images such that it ended up displaying the same generated image five times even though it had generated five different images. Also it didn’t display any of the real images.

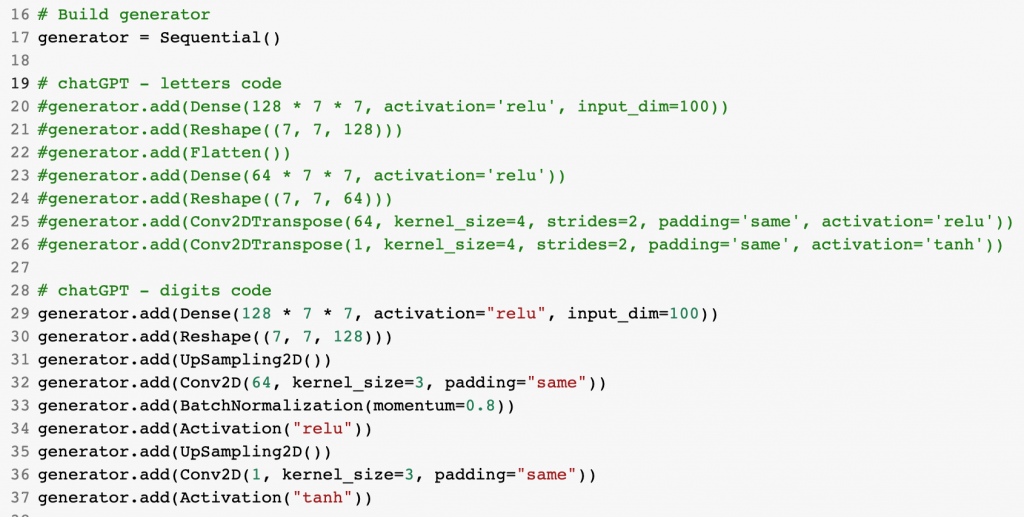

I fixed all the errors, though, and voila… but wait… all the generated images look the same still. The structure of the generator neural network is different, too. Is this new network supposed to work better with the “letters” dataset instead of “digits”? I copied the digits code and used it in place of the letters code and voila … see the comparison below. Note that I didn’t change any of the matplotlib code I had written (i.e., fixed from chatgpt) between the two runs so the fact that it’s showing different e’s now instead of the same e is because of the modified structure of the generator as documented by the third screenshot below. Instead I just used completely different generator code as documented by the third screenshot.

Here is the Google colab notebook: gan-letters.ipynb

Colab is great, but I also wanted to see the performance improvement I could get on my home Mac Studio. Currently, I have had better results with the pytorch framework instead of tensorflow/keras. So…

- “please give me an example gan using pytorch”

- “your code stopped before finishing, can you please finish it?”

- “it still didn’t finish! please try agian”

- “can you output the code assuming cuda is available? there is no need to check for it.”

- “can you just start with the part after you setup the discriminator and generator?”

Finally, it finished … but with lots of errors. The code would not run at all and as I started to pick off each error one-by-one, I ran out of time. So I decided to try again from scratch this morning…

- “create a gan using pytorch that generates letters”

- “your code didn’t finish, can you start with the for loop?”

- “Excellent can you add code after the loss statistics that displays sample generated images alongside real images from the training set?”

- “your code forgets to instantiate the generator and discriminator”

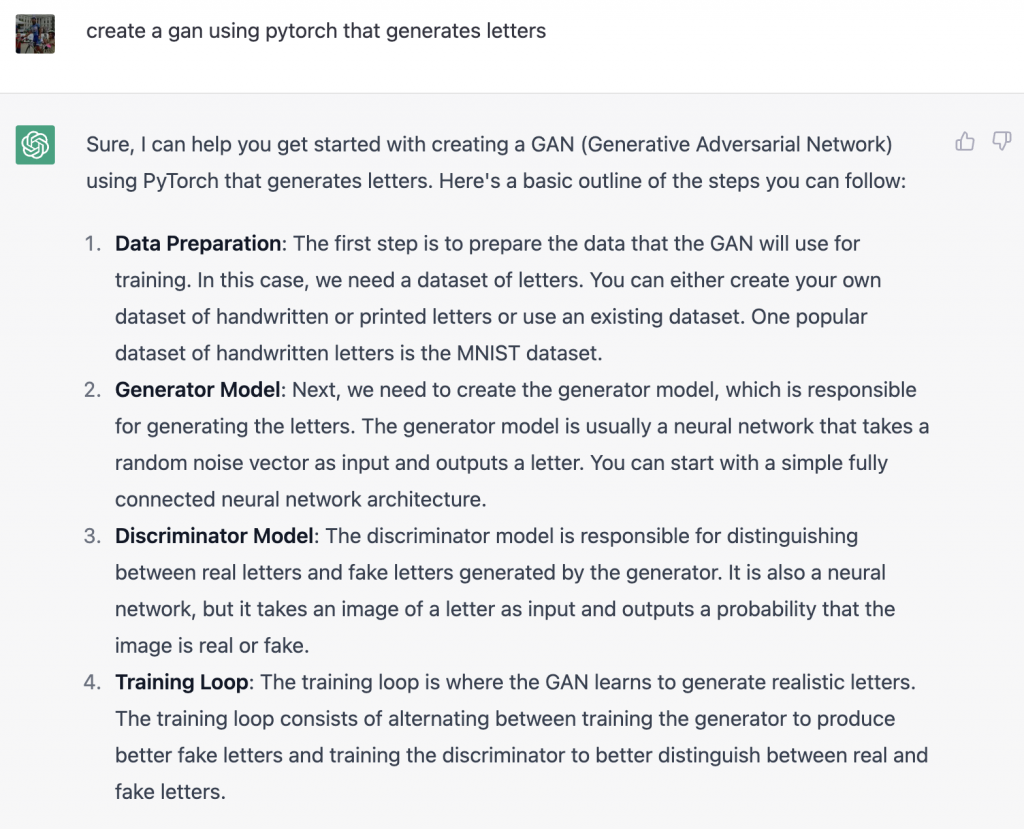

Completely different code, and this time it prefaced it with a nice “educational” explanation of how GANs work (screenshot below)

Errors:

- wrong dataset … it used the mnist digit dataset

- omitted the call to construct the generator and discriminator

- forgot to import make_grid from torchvision.utils even though it gave a long explanation about it

- forgot to import matplotlib.pyplot

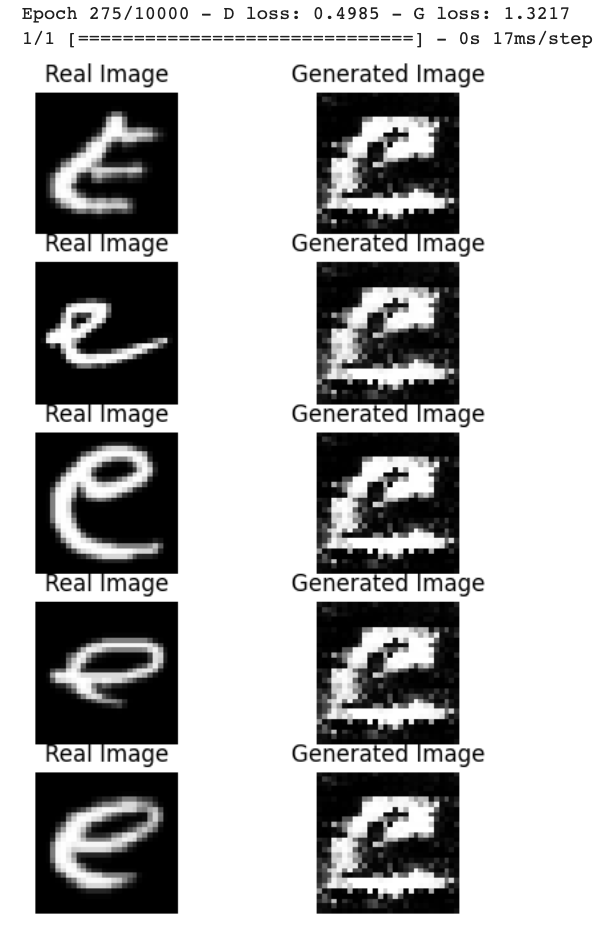

The results were awful, although the matplot layout with make_grid is pretty cool looking:

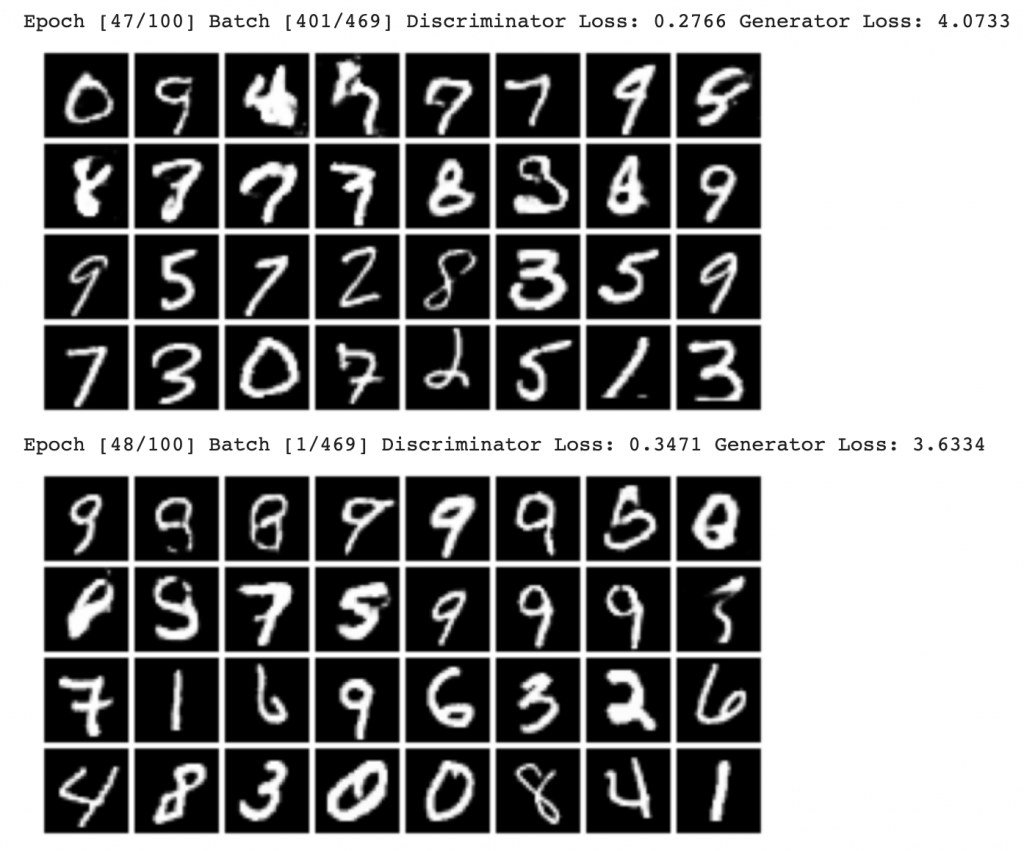

I like the “educational” nature and the linear, fully connected networks make it easier to understand. But in practice, you need to use convolutional neural networks to get meaningful output, so…

- “can you modify the generator and discriminator use convolutional neural networks?”

- “can you show me the training loop for the modified gan?”

The results were not bad at all, especially considering these were trained on ALL the digits:

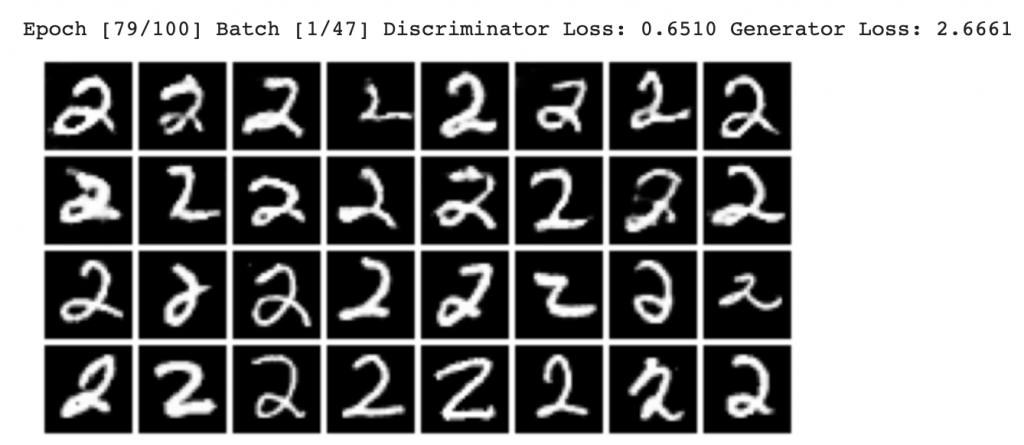

What if we just train it on 2s?

Interestingly, just by asking it to use convolutional neural networks, the output automatically fixed some of the errors it had before, e.g., missing imports were added! Plus it added code to resolve the shifted grayscale output from the generated images from the first network.

So why? Well, first, I’ve been personifying chatGPT quite a bit intentionally, but it’s quite debatable whether chatGPT “knows” anything at all. So what’s going on? When I prompted it to use convolutional neural networks for the generator and discriminator, the new prompt seeded the chatGPT LLM in such a way that it activated output from training samples with convolutional neural networks. Those training samples are going to be much better code and have many more examples because it is well know that image processing works better with convolutional neural networks, which is why the new output didn’t have as many errors.

To be clear, the LLM isn’t being retrained on our prompt, it’s just that our prompt has activated the part of the neural network associated with with “convolutional neural networks”, one of the trillions of tokens claimed to be in LLaMA (presumably a similar number in chatGPT).

As another way of looking at it, as well as to understand how GANs specifically work to create “deep fakes”, consider our silly made up example trying to generate just the letter “e”. If you train our GAN using digits, then you aren’t going to get an “e”. Even if you train the GAN using letters, then you still are very unlikely to get an “e”. But if you only train the GAN using instances of hand-written e’s (especially if you could improve the dataset to only have lower-case e’s), then you are going to get a fairly convincing “e”.

What about that anonymous handwritten letter (e.g., ransom note) you want to generate? Well, consider training 26 different GANs on each of the possible letters. Then segment your “prompt” into individual letters and activate the appropriate network based on each letter and, boom, the output is your anonymous ransom note.

There are a few examples of this already online:

https://arxiv.org/abs/1308.0850

TLDR – Conclusions

First, chatGPT has a hard time finishing a longer code sample. It’s possible that it’s either time or character-limited for the free account. This is easily worked around by telling chatGPT that it’s code didn’t finish and tell it specifically where to start finishing the code.

Next, the code produced by chatGPT is most definitely not infallible. There were compiler errors in the generated code, sometimes from missing imports, sometimes from trying to import a module from the wrong package, and sometimes even syntactical errors.

Also, giving a slightly different prompt can lead to dramatically different code, sometimes with the errors from previous outputs magically fixed or different errors introduced.

Having said all this, the code was still nearly perfect and only required the smallest amount of tweaking to get working. Specifically with neural networks, though, there are a lot of “hyper-parameters” that you must specify. chatGPT picks these numbers and doesn’t give any reasoning behind it.

That being said, chatGPT does a good job of documenting what the code is doing with appropriate comments (it just didn’t include justification for some of the hyper-parameter values it picked – learning rate, number of epochs, batch size, etc…).

For my AI class, chatGPT saved a lot of time/effort googling around to find code tutorials. What potentially is lost, though, is all the explanation that goes along with those tutorials. chatGPT attempts to explain some of its code, and I managed to “accidentally” prompt in a way so that it gave a lot of explanation prior to generating any code. But then the code that was generated along with that explanation was easy to understand but had bad results. I tweaked my prompt to get chatGPT to produce a more complicated GAN and it omitted explanation but generated code that produced better results.

Notebooks referenced in this blog: