I blogged during an epic merge that took a couple weeks to complete in which I reconciled code changes between a development system and a production system which had diverged significantly over a period of a few years. Today is the day I’m going to try to tackle the corresponding data merge mentioned in that post. [Update: it looks like it’s going to take at least the entire weekend.]

Background

As mentioned in that previous post, my development server had diverged quite a bit from the production server. One of the features I was adding on the development server required my entire lifetime history of rides. Since that feature wasn’t available on my production server, I have not uploaded my older historical data obtained from other data sources (e.g., Eddie B. tracking posters in my basement plus old Microsoft Access databases I created in college) onto the production server. But I have carefully entered all that data onto the development server. Similarly, when traveling, I would frequently upload data to the production server, but forget to replicate any of that data onto the development server when I made it back home. So it’s a mess with some data only on development, some data only on production, and some data on both!

Here’s a quick glance at the data statistics on the two systems.

| Development | Production | |

| My total # of rides/routes: | 9101 / 9095 | 8711 / 8698 |

| My total mileage of rides: | 704415 (n/c) | 780874 (n/c) |

| Other user total # of rides: | 141 / 125 | 164 / 148 |

| Other user total mileage: | 11436 (n/c) | 20277 (n/c) |

| My total # of maps: | 632 / 58 | 596 / 61 |

| Other user total # of maps: | 35 / 0 | 39 / 1 |

| My total # of overlays: | 93 (n/c) | 20 (n/c) |

| Other user total # of overlays: | 0 (n/c) | 0 (n/c) |

| Total # of datasets: | 4415 (n/c) | 41480 (n/c) |

on development vs production pre/post clean-up.

3/11 2:21PM started to obtain these numbers before cleanup

3/12 10:39AM finished clean-up (described below).

Clean-up strategy

The first step prior to merging is to clean-up data on both systems:

- Delete zero distance routes

- Delete maps not connected to a file in the filesystem.

- Delete duplicate routes, maps, and overlays

(must be truly identical)

Merge strategy

While a pretty big challenge in and of itself, making sure the routes and maps are NOT duplicated and present on both systems (i.e., completely synced) is not the only challenge. I used the same database for keeping track of all the datasets I used to create the maps. These datasets have diverged quite a bit as well.

Reducing redundancy

The biggest challenge is making sure not to duplicate any data while also not losing any data. The following must all be equal for the ride/route to be considered identical: distance, route_date, and the related route_segments must be identical. If it passes this threshold, I’m going to consider the route identical even though there are many, many other attributes related to the ride/route that I am storing. The decision about which to keep is simple, keep the one that is already on production.

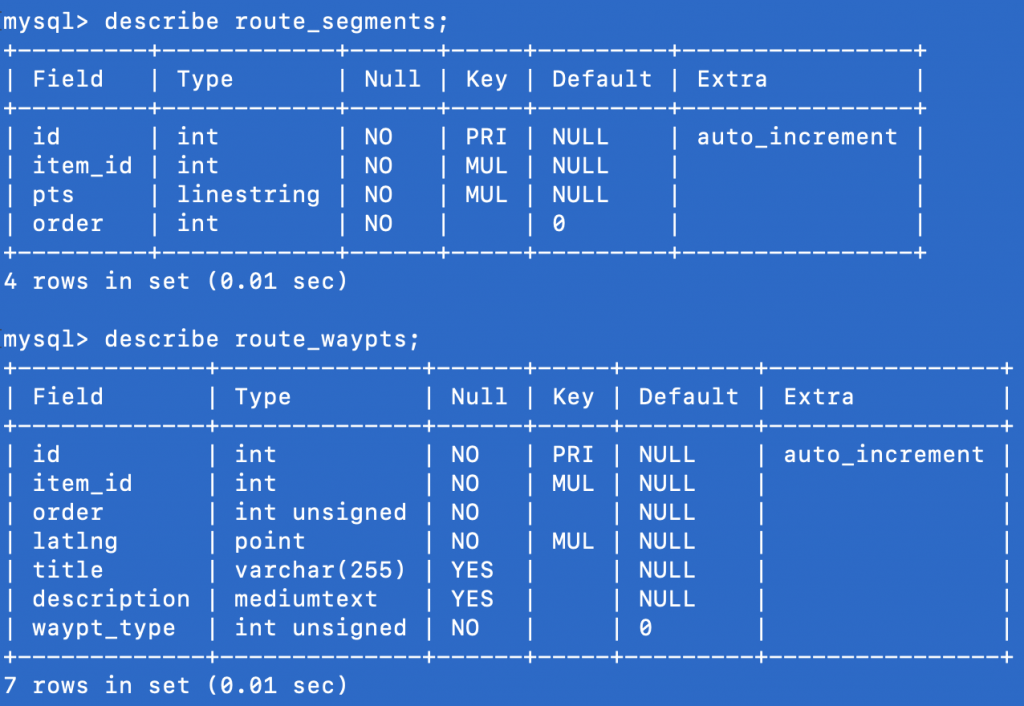

In addition to the key elements of a ride/route that make it unique described in the previous paragraph, there is much more data that I’m storing for rides and routes highlighted in the screenshots below:

The first challenge to overcome when performing the merge is that the databases are currently on two different systems. Several options in order of increasing difficulty:

- Export the test database and reimport under new name directly onto production

- Create a tunnel through the firewall on the test system to allow direct access from production to the test database

- Create a REST api for performing the queries on the test database through the webapp (which would also need to be tunneled)

I am going with the simplest approach as this will necessitate creating a backup of the test database (the export). So that if anything goes wrong, I’ve got a backup. I will also backup the production database before diving into the merge. Thankfully, I don’t have any active users right now on production so I do not have to worry about new data that comes in after the backup.

Despite the size, I ran the backups at the same time and they both took less than a minute to complete. 3/12 11:57AM

newtopodb.development.sql 1.28GB newtopodb.production.sql 1.48GB

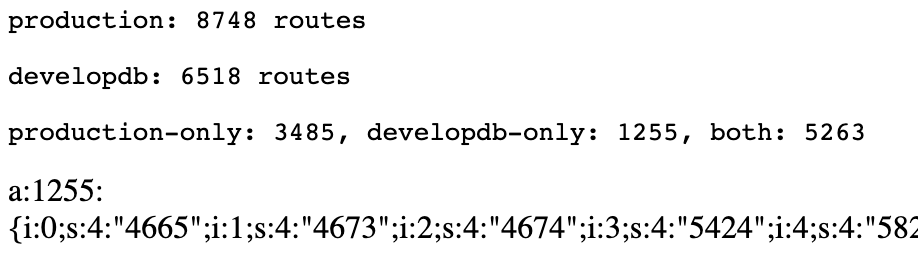

The first step for performing the merge is to assess the current data layout more fully by classifying what data resides ONLY on the development server, what data resides ONLY on the production server, and what data already exists on both. The screenshot below demonstrates the results. This was obtained after importing the exported development database onto production and then updating the production website with a script to analyze the contents of both databases.

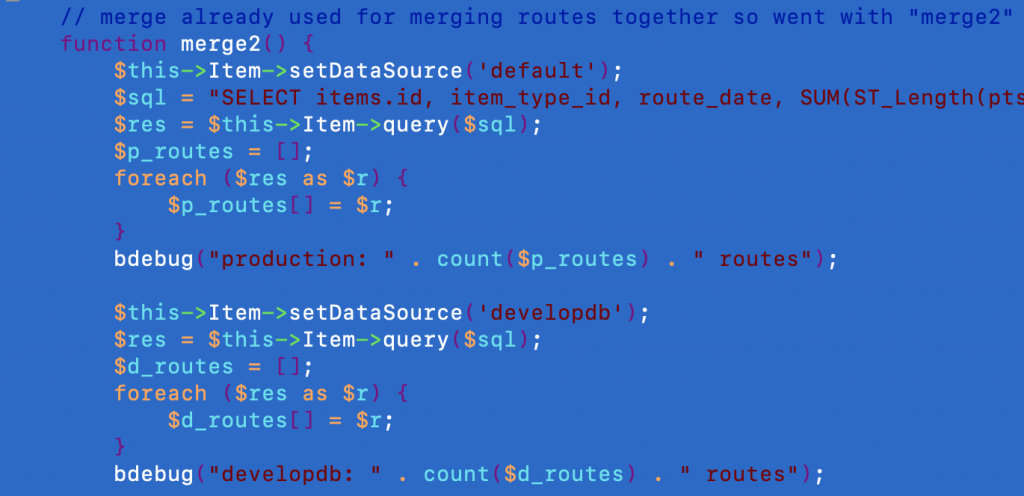

For cakePHP (even all the way back to version 2) you can call the setDataSource on your model at runtime to switch which database it pulls the data from. Here is a screenshot of the code that produced the previous screenshot analyzing the situation to determine which data resides where.

In an interesting discovery during this merge process, I determined that the distance data on duplicate rides on the production server is wrong. I also discovered that there were many development rides that were missing the route_segments entirely … about 3000 of them. I discovered the distance problem by accident when rides that were duplicates were not being matched as duplicates. An approximately 7.5 mile ride had a distance of 95,021 feet on the production server – which has no correlation in feet or any other units to the actual length of the ride. The same ride with the same date and same LINESTRING of lat/lng points from the ride had the correct distance of 39,332 feet on the development server. I might take this opportunity to convert all my distance measurements to metric, which is the generally accepted standard way to store distance data. 3/14 7:26AM

Success! (I hope). There’s no realistic way to know whether my scripts truly captured everything that was unique between the two without manually going through and looking at all the route data. I did my best and am confident enough about it to proceed. It is a good sign that all 1255 development-only routes were imported. But was the script correct in identifying which were actually duplicates? I spot-checked a bunch of them but there were supposedly over 5000 duplicates. I’m about to create some maps that should use ALL the routes that I imported. That should help identify whether anything obvious is missing. Also, I’m going to do some sorting and searching and manually look to see if I ended up creating any duplicate rides/routes unexpectedly. 3/15 8:50AM