One of my longest running projects with many thousands of users now is the website di2stats.com. I just added this message to the import page and disabled the import functionality so that I can migrate the database and file data to a new server.

I started the migration process this morning (which I will detail below) at 4:00AM when even the European users probably aren’t uploading rides yet. I anticipated a brief hour-long process since I am somewhat experienced in doing this sort of migration. What I did not anticipate was the number of errors I would run into because of the sheer amount of data that needed to be migrated.

All of the errors so far were related to the export. I tried to use mysqldump first, but it does not play well with a 40GB database. I kept getting max_allowed_packet errors. I re-ran the script, but the largest I could specify was 2GB … and that was apparently not large enough because it still gave me the same error … after 15 minutes of running.

I switched over to exporting from phpmyadmin. This seems like it was moving faster, but it wasn’t fast enough. I received an “execution time limit exceeded” error message. This was a fairly straightforward fix:

https://stackoverflow.com/a/13858161$cfg['ExecTimeLimit'] = 600;

Note that the answer isn’t exactly correct. On a Ubuntu / Debian system, you should make that change in the /etc/phpmyadmin/config.inc.php file instead of the one mentioned in the post. Also, you do not need to restart anything for this change to go into affect. It is re-read with every new web page request to phpmyadmin.

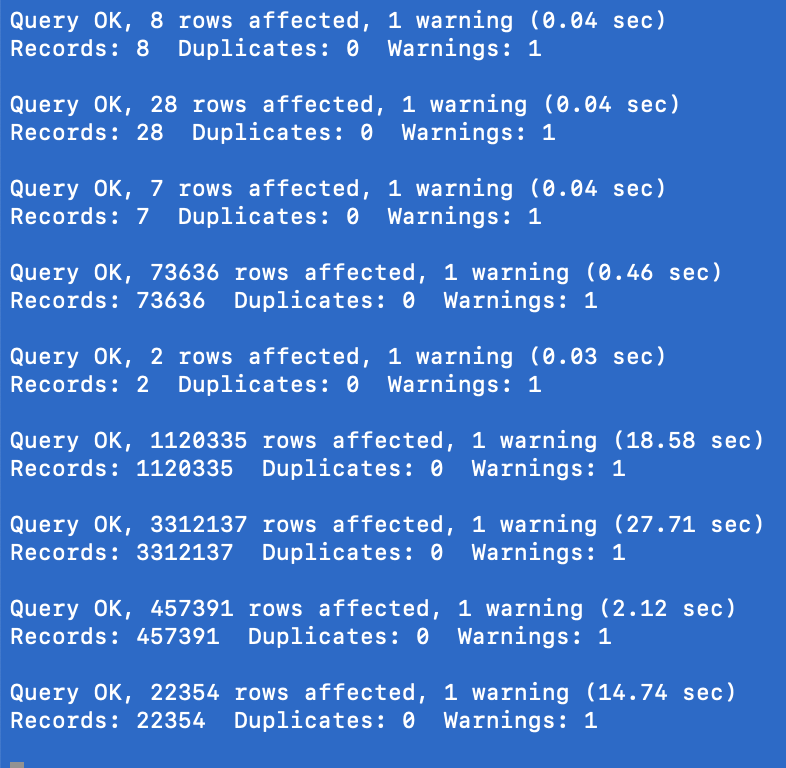

In about 30 minutes, I had exported and downloaded the entire 40GB database to my local server. I figured the import would be quick. I was wrong. It is still going a couple hours later.

I imagine this has to do with updating indexes after the insert. That SHOULD be included in the timing info. It’s possible that there is an option where I can see that. Or perhaps the long delay is something else.

Woohoo! Right as I went to pack up to leave to bike into work, the script finished right before my eyes as I checked on it! 9:13AM

Domain redirecting properly now.

File paths resolved.

Timezone API key updated … time is hard.

Done! Back up and running. All tests passed. 9:40AM

1 comment