One of the features introduced with HTML5 that I have not needed until now is something called “server-sent events”. It’s a strange name, which I think is derived from the HTTP content type text/event-stream that the server-side script generating the events must set.

This isn’t quite the same as web push notifications, which are a bit more invasive because they require the web browser to keep a service running in the background at all times to deliver the notifications. It’s a great feature, but it’s also part of the feature creep that slows down computers over time as more and more services run constantly in the background.

Server-sent events, on the other hand, are only active while the browser tab containing the client-side script is still open (but not necessarily in the foreground) to receive the events. I have been writing this blog post in a different tab while my server-sent events continue to be delivered to another tab running the script I document below. Closing the browser (or just the tab) closes the connection to the web server and terminates the script on the server side. This can be dangerous if the server script was only partially finished with its work. For my purposes, this would leave a ride partially processed. My script would then pick back up later with the same ride, re-process the entire ride, and duplicate some of the partially processed data. I have been careful to manually delete “passes” from partially processed rides before restarting the script as I ironed out all the bugs. Because some duplicate data may still have slipped through, however, I am already planning to write a script that checks for duplicate passes in the database after this analysis script finishes, as part of a larger “data integrity and validation” effort before publishing these results in a journal later this summer.
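
The duplicate check mentioned above could start out as something like the following. This is only a sketch: it assumes a pass can be identified by its ride id and a timestamp, and the field names (rideId, time) and the sample rows are made up for illustration, since the real table layout isn’t shown here.

```javascript
// Group passes by a caller-supplied key and return only the groups
// that contain more than one pass (i.e. likely duplicates).
function findDuplicatePasses(passes, keyOf) {
  const groups = new Map();
  for (const pass of passes) {
    const key = keyOf(pass);
    if (!groups.has(key)) groups.set(key, []);
    groups.get(key).push(pass);
  }
  return [...groups.values()].filter((group) => group.length > 1);
}

// Hypothetical rows: the real columns are an assumption.
const samplePasses = [
  { id: 1, rideId: 7, time: "10:00:01" },
  { id: 2, rideId: 7, time: "10:00:09" },
  { id: 3, rideId: 7, time: "10:00:01" }, // same ride and time as id 1
];

const dupes = findDuplicatePasses(samplePasses, (p) => p.rideId + "|" + p.time);
console.log(dupes.map((group) => group.map((p) => p.id)));
```

Passing the key function in keeps the grouping logic independent of whichever columns turn out to identify a pass.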

Here is simple experimental code to demonstrate Server-Sent Events … first the server script sse.php:

<?php
date_default_timezone_set("America/Chicago");

// tell the browser this response is an event stream, and ask that it not be cached
header("Content-Type: text/event-stream");
header("Cache-Control: no-cache");

while (1) {
    $now = date("Y-m-d H:i:s");

    // a message is a "data: ..." line followed by a blank line
    $msg = 'data: message at time ' . $now . "\n\n";
    echo $msg;

    // write to file to see if server is still executing after tab is closed
    file_put_contents('data.txt', $msg, FILE_APPEND);

    // flush the output buffer and send echoed messages to the browser
    while (ob_get_level() > 0) {
        ob_end_flush();
    }
    flush();

    // pause
    sleep(1);
}

And now the corresponding client side web page sse.html:

<html>
<head>
    <meta charset="UTF-8">
    <title>Server-sent demo</title>
</head>
<body>
    <button>Close the connection</button>
    <ul>
    </ul>
    <script>
        var button = document.querySelector('button');
        var evtSource = new EventSource('sse.php');
        console.log(evtSource.withCredentials);
        console.log(evtSource.readyState);
        console.log(evtSource.url);
        var eventList = document.querySelector('ul');

        evtSource.onopen = function() {
            console.log("Connection to server opened.");
        };

        evtSource.onmessage = function(e) {
            var newElement = document.createElement("li");
            newElement.textContent = "message: " + e.data;
            eventList.appendChild(newElement);
        };

        evtSource.onerror = function() {
            console.log("EventSource failed.");
        };

        button.onclick = function() {
            console.log('Connection closed');
            evtSource.close();
        };
    </script>
</body>
</html>

First, credit to https://github.com/mdn/dom-examples/tree/master/server-sent-events, although I did have to fix some bugs in the PHP script before it would run.

Some key things to point out:

- It looks like you have to send any initial data to the server via a query string when instantiating the EventSource object, since the constructor only takes a URL (plus a withCredentials option) and always issues a GET request.

- If you close the tab while the connection is still open, the “data.txt” file stops growing, meaning that the PHP script is no longer running (and has presumably been removed from memory).

- You can manually close out the connection using the .close() method called on the EventSource object.

- Note the colon-separated key-value format of a single message (the key here is “data”) and the double newline (i.e., a blank line) that ends it.

- UPDATE: PHP locks the session, which will prevent you from connecting in another tab to the same domain name (if you need to access the session for authentication). The simple solution is to call “session_write_close()” immediately after authentication and before doing the heavy lifting on the server. You can do this safely in most cases because it’s unlikely you need the session once you authenticate. Note that this doesn’t log you out or invalidate the session. It just releases the lock on the session file.

- UPDATE: sending a new server-sent event does NOT reauthenticate your session (at least in CodeIgniter). You have to send a simple AJAX request to any page and ignore the result if you want to stay logged in. Theoretically you should be able to increase the session timeout to stay logged in, but no matter what I change (.user.ini for http2 configuration, or calls to ini_set), I cannot get my CodeIgniter session to last more than the default 20 minutes.
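
Two of the points above, the query string and the message format, can be sketched in a few lines of JavaScript. Treat this as a rough illustration rather than a full implementation: the parameter names and the example.com URL are made up, and the parser only handles “data” fields with plain "\n" line endings, while the real format also allows other fields and other line endings.

```javascript
// Build the URL handed to new EventSource(): any initial data has to
// travel in the query string.
function buildStreamUrl(path, params, base) {
  const url = new URL(path, base);
  for (const [key, value] of Object.entries(params)) {
    url.searchParams.set(key, String(value));
  }
  return url.toString();
}

// Format a payload as one message: "data: ..." lines plus a blank line.
// A payload containing newlines is split across several data: lines.
function formatSseMessage(data) {
  return String(data)
    .split("\n")
    .map((line) => "data: " + line)
    .join("\n") + "\n\n";
}

// Parse stream text back into the list of data payloads.
function parseSseMessages(text) {
  const messages = [];
  for (const block of text.split("\n\n")) {
    const dataLines = [];
    for (const line of block.split("\n")) {
      if (line === "" || line.startsWith(":")) continue; // blank line or comment
      const colon = line.indexOf(":");
      const field = colon === -1 ? line : line.slice(0, colon);
      let value = colon === -1 ? "" : line.slice(colon + 1);
      if (value.startsWith(" ")) value = value.slice(1); // one optional leading space
      if (field === "data") dataLines.push(value);
    }
    if (dataLines.length > 0) messages.push(dataLines.join("\n"));
  }
  return messages;
}

// Hypothetical base URL and parameters, for illustration only.
console.log(buildStreamUrl("sse.php", { start: 5, label: "a b" }, "https://example.com/demo/"));
const wire = formatSseMessage("hello") + formatSseMessage("line one\nline two");
console.log(JSON.stringify(wire));
console.log(parseSseMessages(wire));
```

In the browser, the EventSource object does this parsing for you and hands the joined payload to onmessage as e.data.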

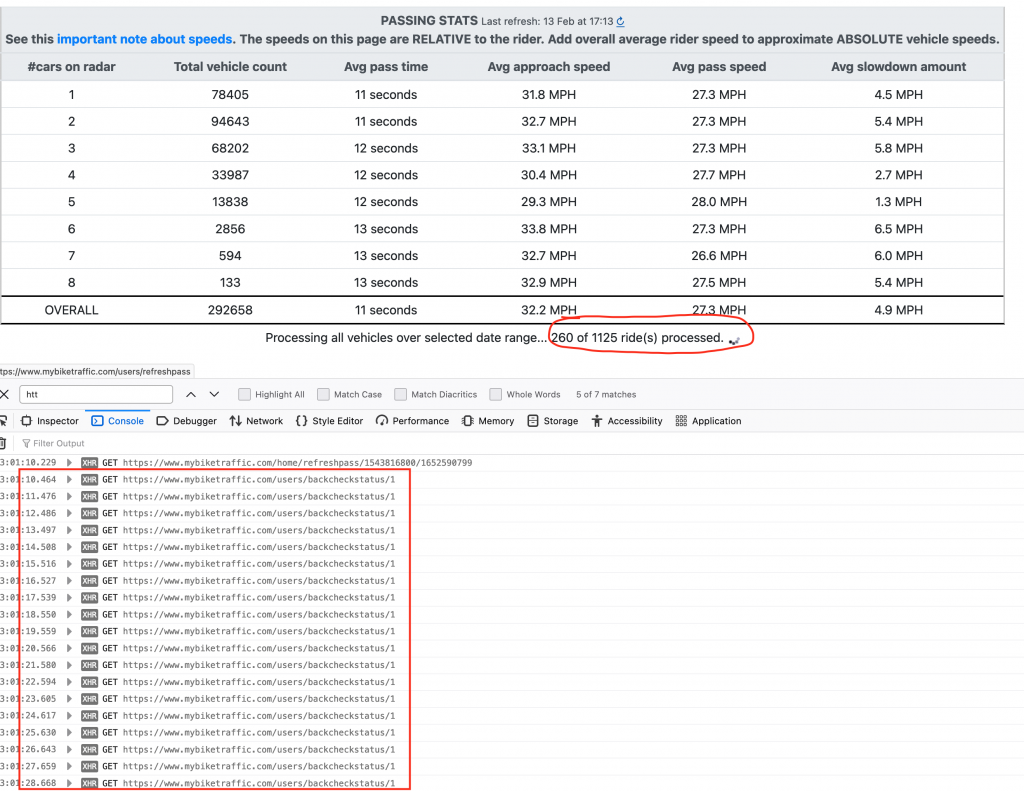

How is this useful? Consider the traditional progress bar. If using AJAX (XMLHttpRequest) to get updates, the client script must repeatedly poll the server, which might mean complicating or splitting up long-running server scripts into stages: unrolling a long loop, for example, or writing out to a status file that would be polled as long as an open connection is still available.

An alternative that avoids polling is WebSockets, but if you are simply displaying a progress bar, you do not need two-way communication. You only need one-way communication from the server to update the client. This is the niche that Server-Sent Events fill, so that you don’t have to deal with the complexity of managing WebSockets.
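
As a rough sketch of the progress-bar case, the client only needs a message handler that turns each event into a percentage. The payload fields (done, total) and the render callback are assumptions made for illustration. The demo uses a stand-in object so it runs outside a browser; in a page you would pass a real EventSource instead.

```javascript
// Convert progress counts into a whole-number percentage (0 to 100).
function toPercent(done, total) {
  if (!(total > 0)) return 0;
  return Math.min(100, Math.round((done / total) * 100));
}

// Attach a progress handler to an EventSource-like object.
function trackProgress(source, render) {
  source.onmessage = function (e) {
    const { done, total } = JSON.parse(e.data); // hypothetical field names
    const percent = toPercent(done, total);
    render(percent);
    // Close once complete so the browser does not try to reconnect.
    if (percent >= 100) source.close();
  };
  return source;
}

// Stand-in for new EventSource(...): only the members used above.
const fakeSource = { closed: false, close() { this.closed = true; } };
const seen = [];
trackProgress(fakeSource, (percent) => seen.push(percent));
fakeSource.onmessage({ data: JSON.stringify({ done: 1, total: 4 }) });
fakeSource.onmessage({ data: JSON.stringify({ done: 4, total: 4 }) });
console.log(seen, fakeSource.closed);
```

Nothing travels from client to server after the initial request, which is why a one-way channel is enough here.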

I’m in the middle of performing a major database operation on my mybiketraffic.com website. Previously, I was limited in how big the database could grow since I was paying for hosting that capped its size. Now that I am self-hosting, I am no longer limited by database size. Because of the old limit, I had been dumping some of my analysis data into JSON files and simply re-running the scripts on all the JSON files whenever I needed to see results. This was already taking forever, but now that I have many thousands of users who have uploaded over 1M kilometers of rides, it is becoming unbearable to re-run the same exact script performing the same exact calculations on the same exact data! Talk about inefficient!

So I am now updating the script to store the results of the analysis so that I don’t have to re-analyze the same rides over and over again. This will vastly increase the size of the database, but I am not limited by storage size. The potential problem is that performing regular online backups becomes more and more difficult as the database grows.

Also, I will run the small analysis script on each new ride as it is uploaded, so that the results are ready almost immediately and users can see more summary information about their own rides without having to wait for a long analysis script to run on all of their rides, which previously took longer and longer the more rides they uploaded. Analysis of the summary data on all my rides takes tens of minutes now.

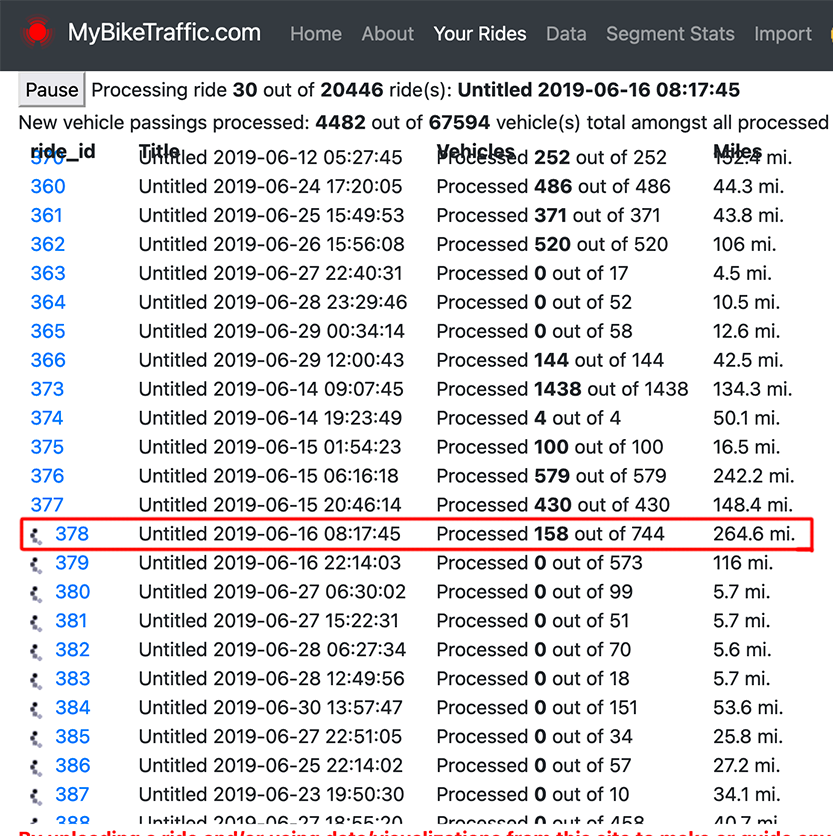

This leads to the updated script below, which I modified to take advantage of Server-Sent Events to display better progress during the processing of each ride. It was taking about a minute to process each ride, with no progress reported until the ride was done. It was going to take a lot of work to split the server-side script up even further by unrolling the loop that processes all the vehicle passings in the ride. Now, with Server-Sent Events, I can more easily send back an event as each vehicle passing is processed, so that I have nice UI updates over a script that could take an entire week to run.
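
The server side of this exchange isn’t shown in this post, but the client code expects JSON payloads with a finished flag, so the stream presumably looks roughly like what the sketch below produces. It is written in JavaScript purely for illustration (the real script is PHP), and the wayid field is a made-up placeholder for whatever a pass record actually contains.

```javascript
// Yield one message per processed vehicle pass, then a final message
// with finished = 1 so the client knows it can close the connection.
function* rideEvents(passes) {
  for (const pass of passes) {
    // JSON.stringify never emits raw newlines, so one data: line suffices.
    yield "data: " + JSON.stringify({ finished: 0, ...pass }) + "\n\n";
  }
  yield "data: " + JSON.stringify({ finished: 1 }) + "\n\n";
}

// Hypothetical pass records, for illustration only.
for (const message of rideEvents([{ wayid: 11 }, { wayid: 12 }])) {
  console.log(JSON.stringify(message));
}
```

Sending an explicit final message matters because a connection that simply drops looks like an error to the browser, which will then try to reconnect.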

var evtSource = false;

// use server-event messages to update progress within single ride
// (rides, ridecnt, stopflag, site_url and updateWays are defined elsewhere on the page)
function processRide() {
    // nothing left to do once every ride has been processed
    if (ridecnt >= rides.length) {
        return;
    }

    // remember this ride's index so the handlers don't depend on the global counter
    const idx = ridecnt++;
    let ride = rides[idx];
    ride['vprocessed'] = 0;
    let rideid = ride['id'];

    evtSource = new EventSource(site_url + "rides/process/" + rideid);

    evtSource.onopen = function() {
        console.log("Connection to server opened for " + evtSource.url);
    };

    evtSource.onmessage = function(e) {
        let jsondata = JSON.parse(e.data);
        if (jsondata.finished == 1) {
            $("#busy" + idx).hide();
            // ride completely finished processing, see if we should start another one
            evtSource.close();
            if (!stopflag) {
                processRide();
            }
        } else {
            // just a vehicle passing, update the ways table and stats appropriately
            ride['vprocessed'] += 1;
            $("#vehiclesprocessed" + idx).html(ride['vprocessed']);
            updateWays(jsondata, rideid);
        }
    };

    evtSource.onerror = function() {
        console.log("EventSource failed.");
    };
}

$(document).ready(function() { processRide(); });
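
One thing to watch for with this pattern: when a connection drops, an EventSource reconnects on its own unless close() has been called, and for a URL like this one that would mean requesting the same ride again and re-processing it from the start. A possible guard, sketched below, is to close the connection in the error handler as well. The function and callback names are made up, and the source factory is passed in only so that the sketch can run outside a browser.

```javascript
// Stream one ride. createSource is injected for testability; in the page
// it would be (url) => new EventSource(url).
function streamRide(createSource, url, handlers) {
  const source = createSource(url);

  source.onmessage = function (e) {
    const payload = JSON.parse(e.data);
    if (payload.finished == 1) {
      source.close();
      handlers.onDone();
    } else {
      handlers.onPass(payload);
    }
  };

  source.onerror = function () {
    // Closing here prevents the automatic reconnect, which would
    // otherwise hit the same URL and restart the ride.
    source.close();
    handlers.onFail();
  };

  return source;
}

// Demo with a stand-in object and a hypothetical ride id of 42.
const log = [];
const fake = streamRide(
  (url) => ({ url, closed: false, close() { this.closed = true; } }),
  "rides/process/42",
  {
    onPass: () => log.push("pass"),
    onDone: () => log.push("done"),
    onFail: () => log.push("fail"),
  }
);
fake.onmessage({ data: '{"finished":0}' });
fake.onerror();
console.log(log, fake.closed);
```

Whether to retry the ride after a failure is then an explicit decision in onFail rather than something the browser does silently.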

In addition to the easier, quicker results analysis already mentioned, this script has helped me find old rides that are missing the pre-processed JSON data file. I can easily see those rides, delete them, and then re-upload the original data files.

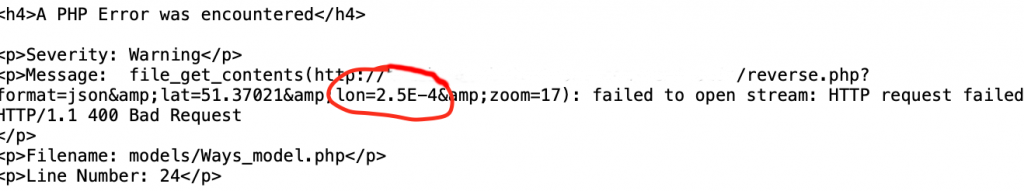

One last note: this has been a tricky script to shepherd through to completion, which is great because it demonstrates the worldwide usage of the website. It means I have collected all kinds of data from all over the world, including rides very, very close to the equator and the prime meridian. This is a problem, as evidenced below: